Benchmarking GPUs for Machine Learning

Machine Learning and neural networks are underpinned by matrix multiplication operations. GPUs are designed to perform many of these operations in parallel, so they can substantially reduce the time needed to train a model, and as a result they are in high demand in the ML community. HPC facilities in particular may offer powerful GPUs to provide this acceleration.

However, the GPUs available on HPC systems differ from those in your local workstation, and it can be unclear which GPUs are best suited to your workload, or how much faster they are than your local GPU. One straightforward way of benchmarking GPU performance across a range of ML tasks is AI-Benchmark. This post provides a quick guide.

Background

AI-Benchmark runs 42 tests on your GPU, covering 19 models across a range of Machine Learning tasks:

MobileNet-V2, Classification

Inception-V3, Classification

Inception-V4, Classification

Inception-ResNet-V2, Classification

ResNet-V2-50, Classification

ResNet-V2-152, Classification

VGG-16, Classification

SRCNN 9-5-5, Image-to-Image Mapping

VGG-19, Image-to-Image Mapping

ResNet-SRGAN, Image-to-Image Mapping

ResNet-DPED, Image-to-Image Mapping

U-Net, Image-to-Image Mapping

Nvidia-SPADE, Image-to-Image Mapping

ICNet, Image Segmentation

PSPNet, Image Segmentation

DeepLab, Image Segmentation

Pixel-RNN, Image Inpainting

LSTM, Sentence Sentiment Analysis

GNMT, Text Translation

When the benchmark is complete, the output includes results for each individual test as well as overall training, inference, and AI scores. You can compare your GPU's performance against the ranking published by AI-Benchmark.
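The ranking compares overall scores, but to see how much faster an HPC GPU is than your local GPU on the specific models you care about, you can compare the per-test times from two runs. The sketch below assumes you have copied each test's reported time (in milliseconds, lower is better) into a dictionary keyed by a test name of your choosing; this dictionary format is our own assumption, not something AI-Benchmark produces. It computes a per-test speedup and summarises the speedups with a geometric mean, the usual way to average ratios.

```python
import math


def relative_speedups(baseline_ms, candidate_ms):
    """Per-test speedup of a candidate GPU over a baseline GPU.

    Both arguments map a test name to its time in milliseconds (lower is
    faster). Only tests present in both mappings are compared. A value
    above 1.0 means the candidate ran that test faster than the baseline.
    """
    common = sorted(set(baseline_ms) & set(candidate_ms))
    return {name: baseline_ms[name] / candidate_ms[name] for name in common}


def geometric_mean(values):
    """Geometric mean of positive values, suitable for averaging ratios."""
    values = list(values)
    if not values:
        raise ValueError("need at least one value")
    return math.exp(sum(math.log(v) for v in values) / len(values))
```

For example, if the HPC GPU takes half as long as your workstation on one test and the same time on another, the per-test speedups are 2.0 and 1.0, and the geometric mean is about 1.41.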

Running AI-Benchmark

AI-Benchmark is distributed as a Python pip package, so setup is straightforward; we used a Python virtual environment in our tests. First, install TensorFlow and the benchmark:

```bash
pip install tensorflow
pip install ai-benchmark
```

Note: GPU support requires NVIDIA CUDA and cuDNN to be installed. For TensorFlow versions older than 1.15, install tensorflow-gpu instead of tensorflow.
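If CUDA or cuDNN is missing or mismatched, TensorFlow will fall back to the CPU, so it is worth confirming that TensorFlow can see your GPU before starting a long benchmark run. A minimal sketch, assuming TensorFlow 2.1 or newer (where `tf.config.list_physical_devices` is available); the function name `check_tensorflow_gpu` is our own.

```python
def check_tensorflow_gpu():
    """Report whether TensorFlow is installed, built with CUDA, and sees a GPU.

    Returns a dict so the result can be printed or checked in a job script.
    """
    try:
        import tensorflow as tf
    except ImportError:
        # TensorFlow is not installed in the active environment
        return {"tensorflow": None, "built_with_cuda": False, "gpus": []}

    # Requires TensorFlow 2.1 or newer
    gpus = tf.config.list_physical_devices("GPU")
    return {
        "tensorflow": tf.__version__,
        "built_with_cuda": tf.test.is_built_with_cuda(),
        "gpus": [gpu.name for gpu in gpus],
    }


if __name__ == "__main__":
    report = check_tensorflow_gpu()
    print(report)
    if not report["gpus"]:
        print("No GPU visible to TensorFlow; check your CUDA and cuDNN installation.")
```

An empty "gpus" list means the benchmark would run on the CPU, and the results would not reflect your GPU.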

Then run the following code in Python:

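```python
from ai_benchmark import AIBenchmark

# Create the benchmark with its default settings
benchmark = AIBenchmark()

# Run the full set of training and inference tests
results = benchmark.run()
```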

This prints the GPU that was detected along with the benchmark results. Please note that AI-Benchmark is not currently designed to test multi-GPU performance and is not optimised to make use of additional GPUs.
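On a multi-GPU HPC node, one way to make sure the results describe a single, known GPU is to restrict which devices CUDA can see through the CUDA_VISIBLE_DEVICES environment variable before TensorFlow is loaded. It is also handy to keep the overall scores from each run so you can compare GPUs or sites later. A minimal sketch of both, with hypothetical helper names. If a job scheduler has already set CUDA_VISIBLE_DEVICES, `use_single_gpu` picks from that list so it stays within the GPUs allocated to your job. `save_scores` assumes the object returned by `benchmark.run()` exposes `ai_score`, `inference_score` and `training_score` attributes, so please verify this against your installed version of AI-Benchmark.

```python
import json
import os
from pathlib import Path


def use_single_gpu(position=0):
    """Restrict CUDA to one GPU in this process (and its child processes).

    If CUDA_VISIBLE_DEVICES is already set (e.g. by a job scheduler), keep
    only the entry at `position` in that list; otherwise treat `position`
    as a device index. Call this before importing TensorFlow or
    ai_benchmark: once CUDA is initialised, changing the variable has no effect.
    """
    current = os.environ.get("CUDA_VISIBLE_DEVICES")
    if current is None:
        chosen = str(position)
    else:
        devices = [d.strip() for d in current.split(",") if d.strip()]
        if position >= len(devices):
            raise ValueError(
                f"only {len(devices)} GPU(s) listed in CUDA_VISIBLE_DEVICES"
            )
        chosen = devices[position]
    os.environ["CUDA_VISIBLE_DEVICES"] = chosen
    return chosen


def save_scores(results, label, path):
    """Write the overall scores from one benchmark run to a JSON file.

    Assumption: `results` has ai_score, inference_score and training_score
    attributes. getattr() with a default of None records a missing
    attribute as null instead of raising.
    """
    summary = {
        "label": label,  # free-form identifier, e.g. site and GPU model
        "ai_score": getattr(results, "ai_score", None),
        "inference_score": getattr(results, "inference_score", None),
        "training_score": getattr(results, "training_score", None),
    }
    Path(path).write_text(json.dumps(summary, indent=2))
    return summary
```

A batch job could call `use_single_gpu(0)`, then import `AIBenchmark`, run `results = AIBenchmark().run()`, and finish with `save_scores(results, "my-label", "scores.json")`.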

HPC Results

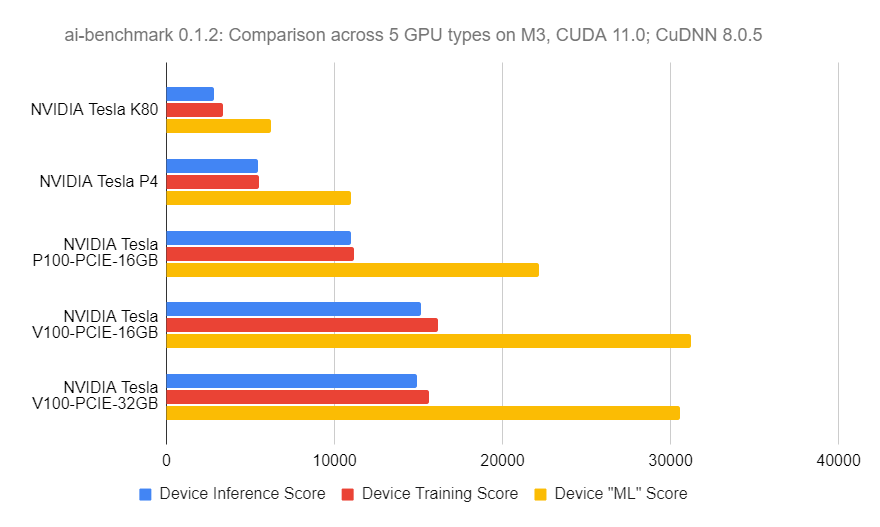

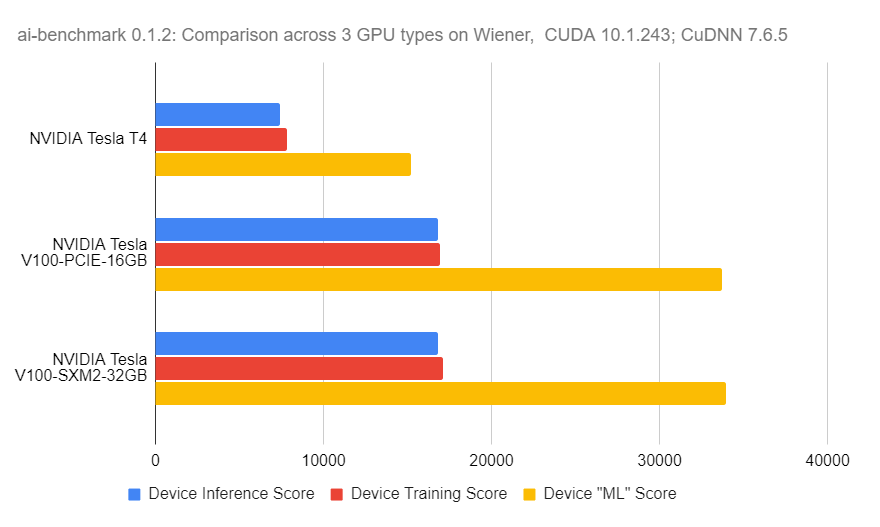

Monash’s MASSIVE M3 and the University of Queensland’s Wiener have benchmarked their GPUs using AI-Benchmark. These results help each site understand which hardware best supports Machine Learning, and will inform future documentation for the community.

Examples of how to run this benchmarking software on MASSIVE M3 can be found in this GitHub repository.

Here are the results for each site.

MASSIVE M3

Wiener